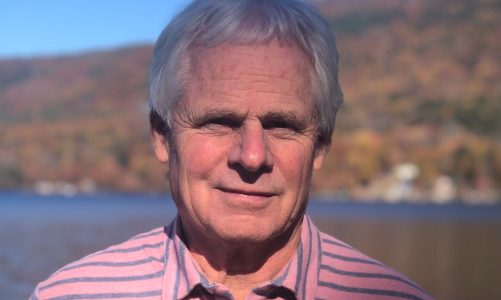

Darrell M. West is the Vice President of Governance Studies and Senior Fellow of the Center for Technology Innovation at the Brookings Institution. His research interests include artificial intelligence, polarization, policymaking, and the future of work. Before joining Brookings, he taught at Brown University for twenty-six years, where he was the Director of the Taubman Center for Public Policy. He is the author of several books, including Turning Point: Policymaking in the Era of Artificial Intelligence (2020), Divided Politics, Divided Nation (2019), and The Future of Work: Robots, AI, and Automation (2018).

Nicole Chen: I want to start by taking you back to 2012, when you wrote your book “Digital Schools: How Technology Can Transform Education.” Almost a decade has passed, and the book is particularly relevant in light of the pandemic and online schooling. You discuss some of the possibilities and great opportunities that technology brings to education. Has COVID-19 helped or hurt those possibilities by forcibly accelerating the integration of technology into the education system?

Darrell M. West: COVID demonstrated some of the weaknesses of our current approaches to online education. First of all, about 20% of Americans are still outside the technology revolution: they do not have access to broadband, can’t stream video, and don’t have access to the latest educational resources. So, certainly, in K-12 education, there are basic access problems that make it difficult for people to take advantage of online learning. But even for those who can engage in online learning, COVID forced everybody into it overnight in a way that many people were not prepared for. Teachers and professors were not prepared, schools and universities were not prepared, the technology often didn’t work as advertised, and sometimes broadband speeds were too slow. [All these factors made it] hard to be as engaging on a Zoom college call as in face-to-face interaction. So although technology creates opportunities to broaden access to resources and give people access to expertise that may not be available in every geographical area, there clearly remain big problems of access and implementation.

Will we see more integration of technology and school in the post-COVID world, or will people revert to traditional in-person schooling?

I don’t think we’re actually going to go back to the pre-COVID world, whether in education, healthcare, e-commerce, or any other area. COVID compressed what might have been five years of change into five months or five weeks. It really sped up the innovation cycle, and many of those innovations are going to stay. We will go back into the classroom for in-person education, but online elements will remain important. Professors will keep taking advantage of the internet, and people are integrating video streaming into the education process. Many of the things we saw as a result of COVID over the last year are going to become permanent features of the landscape, and we’re probably going to end up with some sort of hybrid model that’s part online and part in-person.

What are some of the greatest positives and downsides of artificial intelligence (AI)?

AI is the transformative technology of our time. It is being deployed in many different sectors and has many different applications, from healthcare and education to e-commerce, transportation, and national defense. It certainly brings a number of benefits: it can relieve humans of routine or tedious tasks and free people for higher-level activities. It can also promote human safety by removing people from dangerous activities, so AI clearly has a number of strengths.

But at the same time, there are a number of problems and dangers. We’ve already seen evidence of bias and inequity creeping into AI applications. AI is only as good as the data on which it is based, and a lot of historical databases are either incomplete or unrepresentative. Relying on unrepresentative data produces unrepresentative algorithms, which then lead to unfair and outright biased outcomes. This has been a problem in the financial sector, where there has been some evidence of racial and/or gender bias in loan approvals. In facial recognition, AI algorithms are much more accurate at identifying Caucasian than non-Caucasian individuals, in part because the data on which they are trained overrepresents Caucasians. So as AI becomes more ubiquitous, we have to be very careful that it does not create more problems of fairness, equity, or bias. In [our] book “Turning Point: Policymaking in the Era of Artificial Intelligence,” [my co-author John Allen and I] looked at both the possible benefits and risks and presented a policy blueprint for dealing with some of those issues.

According to the Pew Research Center, roughly 60% of Americans believe they cannot go through daily life without having their data collected by corporations. Pew also found that Americans are equally worried about the government collecting their data. Should Americans be as worried about the government as they are about corporations?

People should worry about both. They should worry about the government having access to their information, but they should also worry about private companies, because those companies are using the information to market to you. Often the data you provide to one company gets combined with data you provide to a second, third, or fourth company, and before you know it, through data sharing and data integration, organizations have hundreds of data points on every single individual. That’s pretty much the situation that exists now. There is a commercial market where companies are basically selling people’s private information, and if they have your cell phone number or your email address, it’s easy to integrate different datasets. I worry about privacy invasion from both the government and business. There are different kinds of risks from each of those entities, but both are highly problematic from a privacy standpoint.

When you say there are “different kinds of risks from each,” how exactly does government data collection pose a different risk than corporate data collection?

Government data collection can be used to suppress people, erode human rights, or create safety problems for people. We certainly see that in many nations around the world, where technology has become a tool for suppression and mass surveillance. In “Turning Point: Policymaking in the Era of Artificial Intelligence,” we argue that technology should conform to basic human values. We want it to respect democratic rights, and we need to take action to make sure that is the case, especially since it clearly is not the case in many countries around the world. There is a risk that authoritarian models will take over from democratic models in the long run.

What policy changes do you hope to see with regard to AI?

There are lots of changes that need to be made. In the area of bias and equity, we need more inclusive development of technology. We need to create an environment where more people can gain the benefits of technology without suffering the downsides.

From a privacy standpoint, we need a national privacy law. Right now, some states have recently adopted tougher laws, but many states either don’t have laws or have very weak ones, which means consumer protections are not very robust. We support a national privacy law that would ensure technology protects consumer privacy and people’s confidential information.

In the international area, there need to be international agreements and treaties to safeguard the use of AI. AI can be problematic in many ways, and we need to handle it the same way we deal with other technologies. We impose guardrails on the use of chemical weapons and other weapons of mass destruction, and we have treaties that guide the development of new technologies considered harmful. We need to start thinking about global AI treaties that put some limits on how different countries use it. You want to make sure that the technology conforms to basic human values and does not become a tool for suppression.

Is passing a national AI privacy law feasible?

I think it is quite feasible. Even the tech companies have called for a national privacy law because they want one set of standards across the United States. They actually don’t like a situation where the fifty states have differing rules, differing regulations, and differing requirements. You can’t really design an algorithm just for the state of Texas that doesn’t also apply in New York or California or Florida, so they want greater consistency in the rules. Many of the CEOs of leading tech companies have actually called on Congress to adopt a national privacy law, so they understand that consumers want that. If you look at public opinion data, concern for personal privacy is at the top of the list for many Americans. So there is a bipartisan coalition of legislators that are working on this.

That’s fantastic to hear, especially as bipartisan lawmaking seems to be less and less common. How exactly do you feel that AI, and technology in general, has led to the current polarization?

Technology has made polarization worse because it is very easy to find like-minded people through online platforms. A lot of people are just creating their own little bubbles where their extremism and radicalization worsen due to the reinforcement they get from the people [in these online communities]. [These echo chambers] reinforce irrationality. We seem to be in a situation where fear and emotion are triumphing over facts and reason. Obviously, not all of those things are due to technology, but I do think that technology has made many of those problems worse.

So, in that respect, what exactly do you think it would take to really bridge the political divide that we’re currently seeing?

In my book “Divided Politics, Divided Nation,” I argue that much of our polarization has developed over the last forty years due to structural problems in our economy and our society. These include very high levels of income inequality, racial antagonism, and geographic disparities, with most of America’s economic activity taking place on the East Coast, the West Coast, and in a few metropolitan areas in between. Much of the heartland is being left behind, and people there see very few opportunities for themselves or their children. So they’re lashing out and looking for scapegoats for the fact that they have not done well over the past forty years. If we really want to get serious about solving polarization, we have to deal with the underlying issues that are generating the animosity, the antagonism, and the anger. From my standpoint, issues like income inequality and racial antagonism are the bedrock issues [of conflict], so we need to make progress on them before we can reduce extremism and polarization.

As someone who has spent a lifetime working in policymaking, what helps you remain hopeful about the future?

I am an optimistic person by nature. Even though we confront very serious issues right now, what gives me the most hope is young people. Young people are engaged with issues; they’re thinking about change, they’re worried about climate change, and they’re sensitive to issues of how different people interact with one another. The next decade is going to be a rocky time in American politics and there are lots of things that could go off the rails but, on a longer-term basis, America is changing in ways that are going to move the country in a more positive direction.

On the subject of young people, what advice would you give those who hope to make a change in the political and/or social climate?

I encourage people to get involved in whatever way they feel would be helpful. For some people, that means public service, actually working for government agencies. A big retirement wave is going through state and federal agencies, so there is a lot of opportunity there, and we need young people to take on those jobs. But, of course, not everybody likes government service. When I was at Brown, many students were really interested in the non-profit world, and that can certainly be a great vehicle for change. Some people should also think about the private sector, because corporations have great power in the United States and around the world, and if you can change corporate practices, you can change the way society functions. So people need to figure out which path interests them most. There are opportunities to change the world in every sector: public, non-profit, and private.

We live in a world of megachange. Large-scale change is taking place, and the world is going to look very different five or ten years from now. Young people are going to encounter a lot of change. Things that bother you now may be resolved over the next ten years; other problems may get worse a decade from now, but we are definitely not living in a static world. People should understand that things that seem impossible today may not be impossible five or ten years from now.

*This interview has been edited for length and clarity.*